How to Backup Universal Analytics Data Easily

On July 1st, 2024, Google will finish the process of replacing Universal Analytics with Google Analytics 4. All UA data will be deleted, and without a backup you’ll lose this information for good. In this article, we’ll explore why UA data is important and show you how to back up Universal Analytics data with a step-by-step guide.

This article is a webinar write-up for the GA4ward talk by Ameet Wadhwani, Product Manager of Analytics Canvas. You can find his slides here and a recording of his talk below:

Why back up your Universal Analytics data?

There is no chance that Google will extend the deadline beyond July 1st. Once your UA data is gone, it’s gone. Google is telling you loud and clear that it’s time to back up.

But why should you? Here are the top three reasons for backing up:

- Historical Data Analysis – Without historical data, we cannot analyse past trends, such as how websites and campaigns have performed or the impact of seasonal changes. Having historical data located in one place is a huge benefit for analysts.

- Policy – Organisations in many sectors, from government to healthcare, may be required by policy to retain their UA data. Agencies that have been paid for performance, in particular, need the data as evidence.

- Blending with GA4 – Stakeholders who aren’t up to speed with UA and GA4 don’t want to see a new set of reports. They want continuity in reporting and need to be gradually brought to GA4.

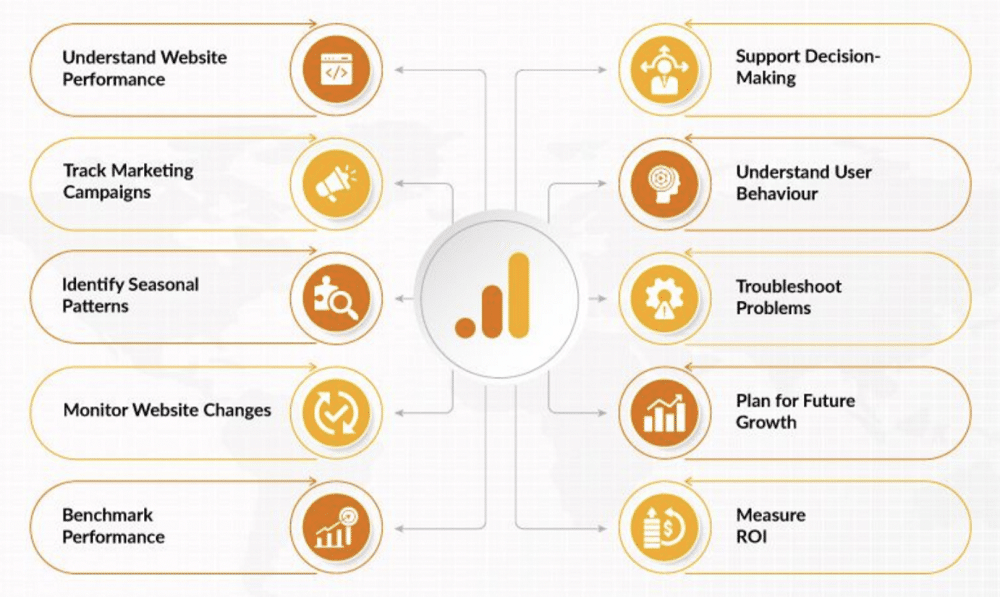

If you’re still unconvinced, you can find further reasons for backing up UA data in the image above.

What to back up

Prioritise the following areas.

- Your existing dashboards and reports – You’re probably doing most of your historical analysis within Google Analytics dashboards, and they’re likely what your stakeholders are used to seeing.

- The most relevant metrics and dimensions – These will be essential if you’re considering blending your data.

- Anything you routinely review in the web interface – Ask your stakeholders which custom reports are most important to them.

- Anything you may want to analyse again in the future – For example, device, ads, site speed, etc. Take a survey of the key data that has been relevant in recent years, and dial this into your backup.

- Anything else mandated by your organisation or client – Organisations or clients may have specific expectations about what should be backed up. Make sure your backup meets these requirements.

How far back should you go?

A good starting point is to go back as far as your last major website redesign. If you’ve significantly changed your site, data from before this point will not be relevant.

Think about your budget. There are plenty of free solutions available, or you could opt for a low-cost choice such as Analytics Canvas. Free solutions are less effective, and even with a lower-priced solution, the more you back up, the more you’ll pay.

Data retention settings

It’s important to consider your data retention settings. Someone in your organisation may have put a data retention policy in place. If so, only a limited window of data will be available to back up.

Data retention settings are applied on a rolling basis, so once they’re activated, data older than the retention period will already have been deleted.

To deactivate this setting, go to the Admin section of your Universal Analytics property and choose “Tracking Info” > “Data Retention”. Here you can specify how long Google Analytics retains user and event data before automatically deleting it. From the menu, choose “Do not automatically expire”.
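To see how a rolling retention setting limits what’s left to back up, here is a minimal sketch that estimates the oldest month of user- and event-level data still available. It assumes the UA retention options of 14, 26, 38 and 50 months plus “Do not automatically expire”, and it treats deletion as happening month by month. Google’s actual deletion schedule may differ, so read the result as an approximation rather than an exact cutoff.

```python
from datetime import date
from typing import Dict, Optional

# Assumed UA retention options in months; None means "Do not automatically expire".
RETENTION_OPTIONS: Dict[str, Optional[int]] = {
    "14 months": 14,
    "26 months": 26,
    "38 months": 38,
    "50 months": 50,
    "Do not automatically expire": None,
}


def subtract_months(d: date, months: int) -> date:
    """Return the first day of the month that lies `months` months before d's month."""
    total = d.year * 12 + (d.month - 1) - months
    return date(total // 12, total % 12 + 1, 1)


def oldest_retained_month(today: date, setting: str) -> Optional[date]:
    """Approximate first month of user/event-level data still retained.

    Returns None when the data never expires, i.e. retention has deleted nothing.
    """
    months = RETENTION_OPTIONS[setting]
    if months is None:
        return None
    return subtract_months(today, months)


if __name__ == "__main__":
    for option in RETENTION_OPTIONS:
        print(option, "->", oldest_retained_month(date(2024, 6, 1), option))
```

For example, a 14-month setting checked in June 2024 means data from before roughly April 2023 has already gone.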

Other backup considerations

Audit your backup

Under Admin, choose Property > Custom Definitions and check for Custom Dimensions and Metrics. If any are in use, determine what they are and which tables or reports should contain them.

Audit for goals

UA allowed up to 20 Goals per view, but not everyone used all of them, so auditing Goals can be a simple way to scale back your backup. To audit Goals, choose Admin > View > Goals and check which Goals are configured to determine which ones to include in the backup.

Audit for events

Some people have defined a lot of events in their implementations, so make sure you’re capturing the right dimensions and metrics alongside the events on your site. To carry out this audit, go to Reports > Behavior > Events > Overview and check which events exist. Discuss the use of events with stakeholders to understand the tagging strategy and how to create valid event reports.

Audit for demographic and interest reports

Demographics and interests reports aren’t enabled for all sites. If they are enabled, you can view them under Reports > Audience > Demographics > Overview. Oddly enough, the more dimensions you add to the table, the fewer results you’ll get. This is because Google anonymises the data to ensure that individuals aren’t identifiable.

Audit for segments

Many organisations rely heavily on segmentation, so this audit is critical. Segments can be found under Admin > View > Segments. It’s also worth asking stakeholders whether they applied segments to their reports.

Three options for backing up UA data

Google has provided a few options for backing up UA data. The first is the web UI, from which you download your reports individually as PDF or Google Sheets files. This option is far too time-consuming.

The second option is to use the BigQuery export. This option is only available to Google Analytics 360 customers and uses a nested data model, so you’ll need a strong knowledge of SQL to complete it successfully.
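Here is what “nested” means in practice. In the UA 360 BigQuery export, each row of a `ga_sessions_YYYYMMDD` table is one session, and that session’s individual hits sit inside a repeated `hits` field. The minimal sketch below flattens sessions into one row per pageview hit in plain Python. The sample rows are made up and carry only a handful of the export’s fields (`fullVisitorId`, `visitId`, `hits.hitNumber`, `hits.type`, `hits.page.pagePath`). Inside BigQuery, the same flattening is usually done in SQL with `UNNEST(hits)`.

```python
from typing import Any, Dict, Iterator, List

# Made-up sample rows shaped like the UA 360 export: one dict per session,
# with that session's hits nested in a list.
sessions: List[Dict[str, Any]] = [
    {"fullVisitorId": "111", "visitId": 1,
     "hits": [{"hitNumber": 1, "type": "PAGE", "page": {"pagePath": "/"}},
              {"hitNumber": 2, "type": "EVENT", "page": {"pagePath": "/"}},
              {"hitNumber": 3, "type": "PAGE", "page": {"pagePath": "/pricing"}}]},
    {"fullVisitorId": "222", "visitId": 7,
     "hits": [{"hitNumber": 1, "type": "PAGE", "page": {"pagePath": "/blog"}}]},
]


def flatten_pageviews(rows: List[Dict[str, Any]]) -> Iterator[Dict[str, Any]]:
    """Yield one flat row per PAGE hit, copying the session-level keys onto it."""
    for session in rows:
        for hit in session["hits"]:
            if hit["type"] == "PAGE":
                yield {"fullVisitorId": session["fullVisitorId"],
                       "visitId": session["visitId"],
                       "hitNumber": hit["hitNumber"],
                       "pagePath": hit["page"]["pagePath"]}


for row in flatten_pageviews(sessions):
    print(row)
```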

The last option is to use the API alongside commercial tools. Google has also offered a solution that connects to the API via Google Sheets. There is a long list of challenges associated with reporting against this API, including:

- There is no “download all”.

- Sampling (500K+ sessions).

- Report Query Limiting (50K+ rows).

- Max 9 dimensions + 10 metrics per query.

- Partitioning on unique metrics (Users can’t simply be summed across partitions).

- Limit of 100k rows per request.

- Some dimensions return no data (demographics).

- Some combinations of Events exclude data.

- Inclusion of custom dimensions limits results.

- Some valid queries will simply not return data!

- “Limiting dimensions” restrict sessions in results.

- Quota limits, API errors, and more.
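A common way to work around several of these limits is to keep each request within the per-query dimension and metric caps, and to split long date ranges into small chunks. Each request then covers fewer sessions and is less likely to be sampled. The sketch below covers only that planning step. The caps come from the list above, and the chunk size is an assumption you would tune. Sending the requests and paging through results are left out, and unique metrics such as Users still can’t be summed across the resulting chunks.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Tuple

MAX_DIMENSIONS = 9   # per-query caps from the list above
MAX_METRICS = 10


@dataclass
class QueryPlan:
    dimensions: List[str]
    metrics: List[str]
    date_ranges: List[Tuple[date, date]]


def split_dates(start: date, end: date, days_per_chunk: int = 1) -> List[Tuple[date, date]]:
    """Split an inclusive date range into consecutive chunks of at most days_per_chunk days."""
    if days_per_chunk < 1:
        raise ValueError("days_per_chunk must be at least 1")
    chunks = []
    current = start
    while current <= end:
        chunk_end = min(current + timedelta(days=days_per_chunk - 1), end)
        chunks.append((current, chunk_end))
        current = chunk_end + timedelta(days=1)
    return chunks


def plan_query(dimensions: List[str], metrics: List[str],
               start: date, end: date, days_per_chunk: int = 1) -> QueryPlan:
    """Check the per-query caps, then partition the date range into small requests."""
    if len(dimensions) > MAX_DIMENSIONS:
        raise ValueError(f"{len(dimensions)} dimensions exceeds the cap of {MAX_DIMENSIONS}")
    if len(metrics) > MAX_METRICS:
        raise ValueError(f"{len(metrics)} metrics exceeds the cap of {MAX_METRICS}")
    return QueryPlan(dimensions, metrics, split_dates(start, end, days_per_chunk))


plan = plan_query(["ga:date", "ga:pagePath"], ["ga:pageviews", "ga:sessions"],
                  date(2023, 1, 1), date(2023, 1, 10), days_per_chunk=3)
print(plan.date_ranges)
```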

The Analytics Canvas Solution

With little time to back up our data, the only realistic option is to use an off-the-shelf solution. Analytics Canvas has spent the past ten years building an engine designed specifically to extract UA data at scale across large sites.

- Pre-built backup data model.

- Eliminate sampling and report query limiting (RQL).

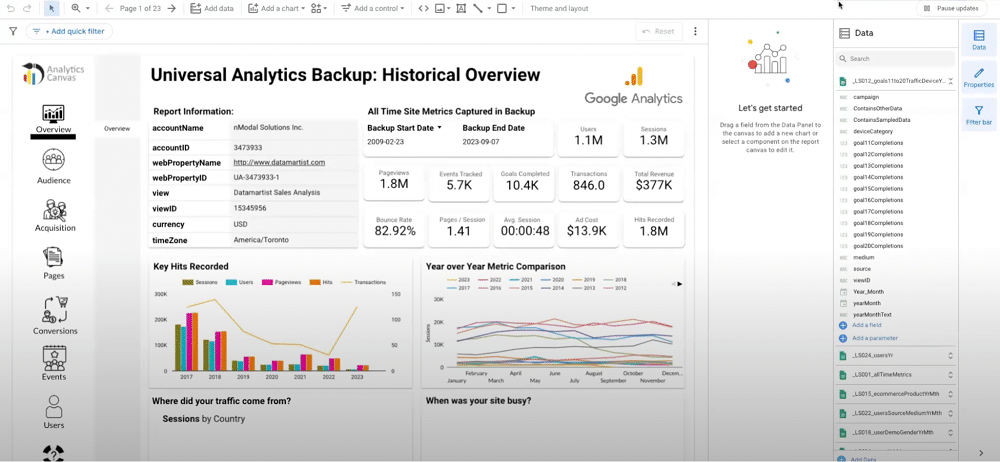

- Access to a 20-page Looker Studio Report.

- Get the full history from unlimited Views.

- Publish to BigQuery, Sheets, Excel and CSV.

- Most affordable pricing in the industry.

How to back up UA data in Canvas

Let’s look at how to back up Universal Analytics data in Canvas step-by-step.

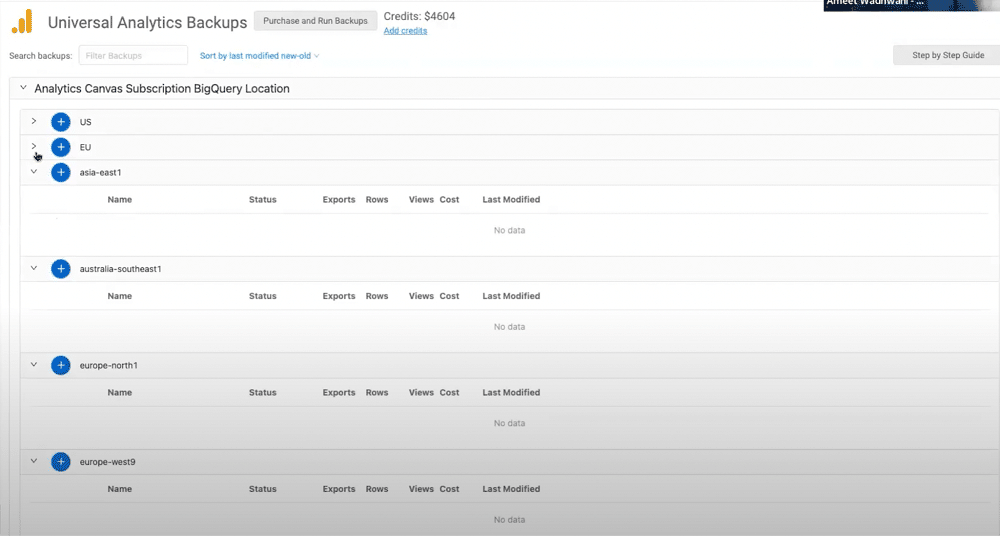

Step 1 – Choose a backup location

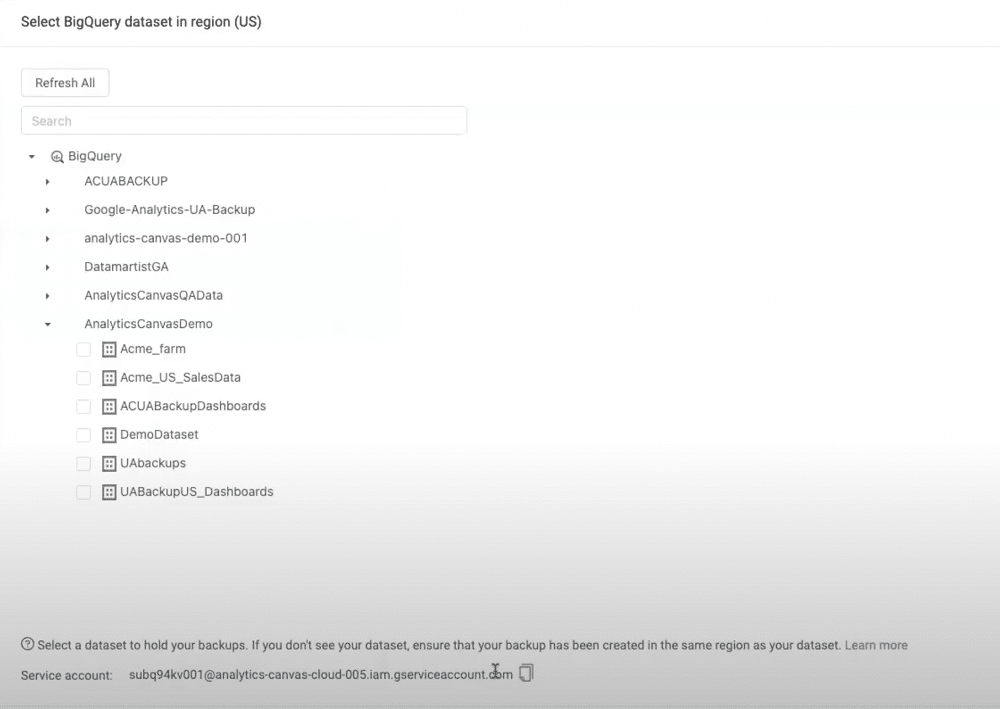

From the UA backups menu in Canvas, you can back up to any data location that BigQuery supports. Choose a location that makes sense to you and build your backup there.

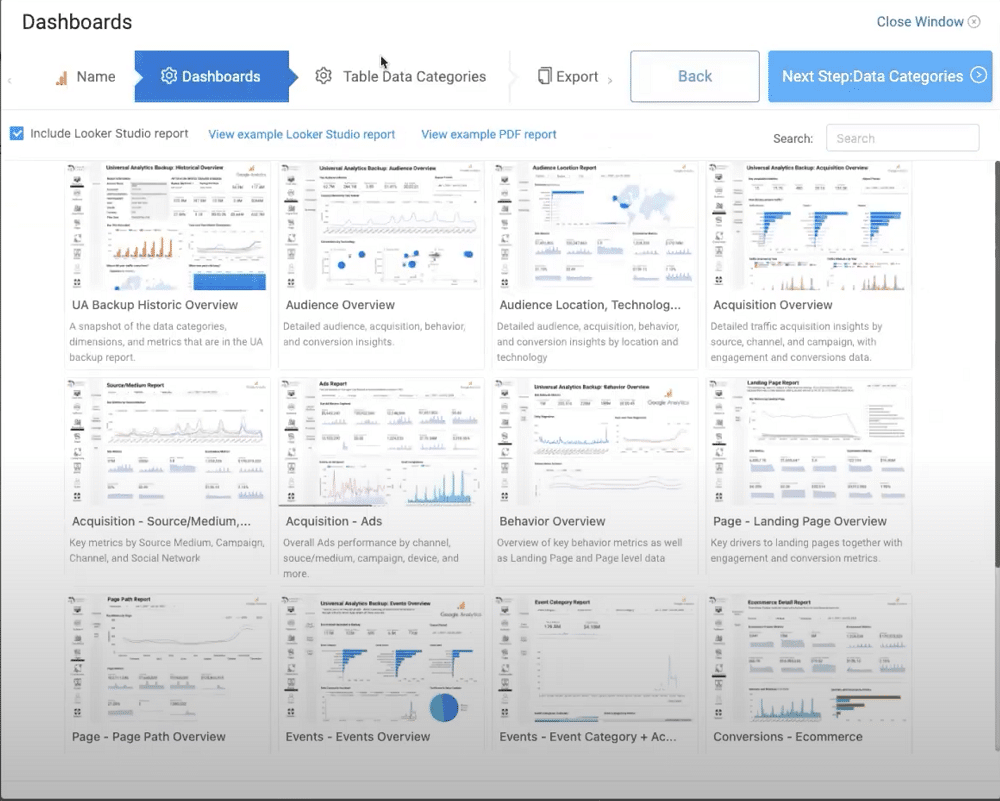

Step 2 – Name your backup and choose your dashboards

Upon choosing a destination for your data, you’ll be prompted to name your backup. You’ll also be asked whether you wish to keep your dashboards. To support your decision, Canvas allows you to preview these through a PDF or via BigQuery.

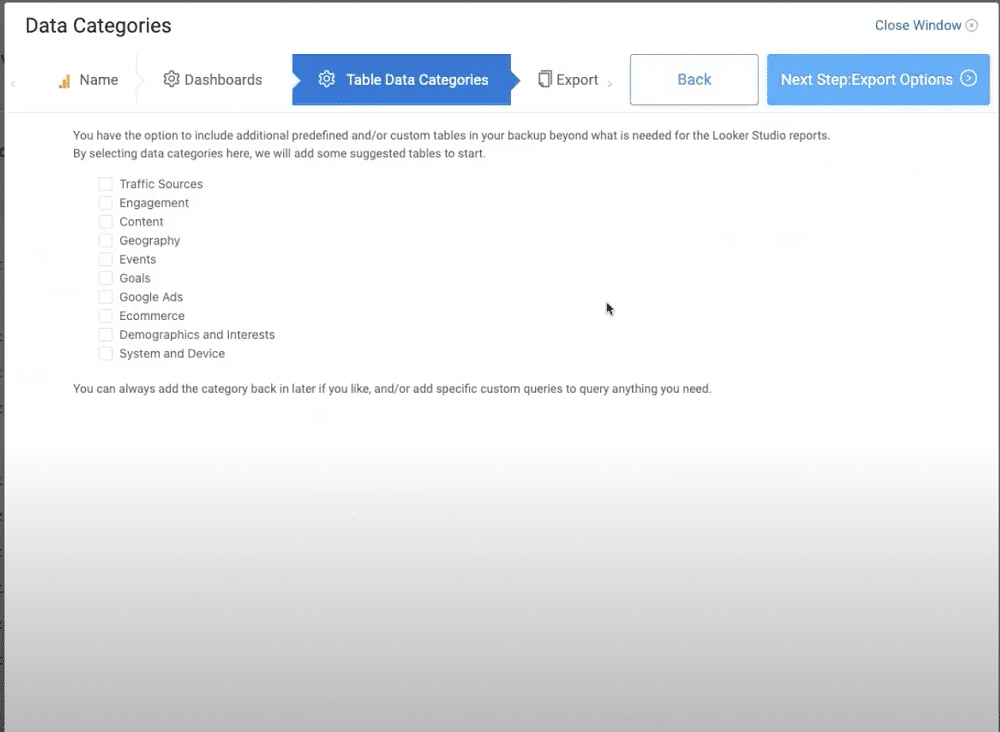

Step 3 – Pick a data category

Next, you must choose a data category modelled from the UA reporting API. You can select one or more categories. After selection, the tool will add default tables modelled from the UA web interface.

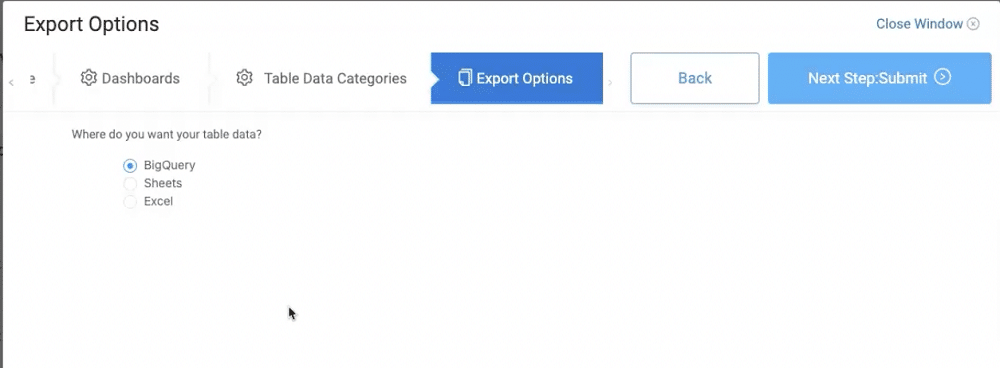

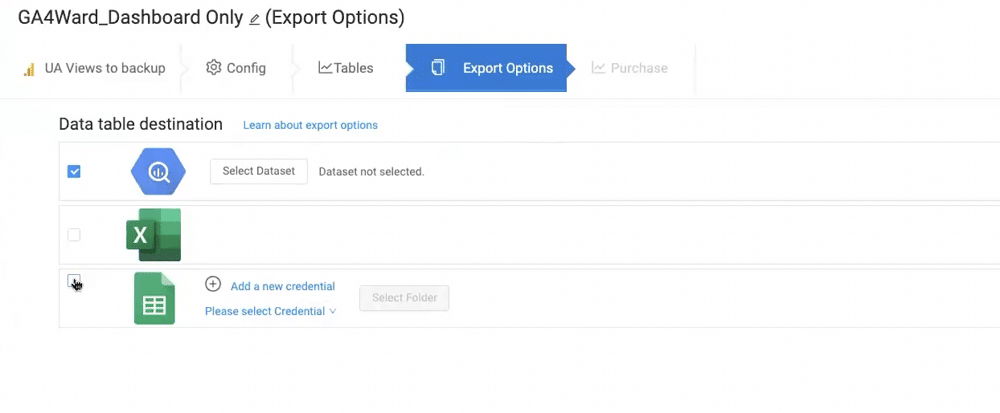

Step 4 – Send your backup to BigQuery, Google Sheets, or Excel

You can now choose BigQuery, Google Sheets, or Excel as a destination for your data. If you select BigQuery, you will receive a much more detailed backup.

If you choose Sheets or Excel, you will receive a lower level of detail, because Sheets and Excel have hard limits on how much data a spreadsheet can hold.

Note: If you choose Excel you won’t have access to dashboards.
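To get a feel for those hard limits, the sketch below checks whether a single table, on its own spreadsheet, would fit in each destination. It assumes the published limits of 10 million cells per Google Sheets spreadsheet, and 1,048,576 rows by 16,384 columns per Excel worksheet. Canvas may apply its own, lower limits, and the header row is counted as a row here.

```python
from typing import Dict

SHEETS_MAX_CELLS = 10_000_000   # per Google Sheets spreadsheet (assumed published limit)
EXCEL_MAX_ROWS = 1_048_576      # per Excel worksheet
EXCEL_MAX_COLUMNS = 16_384


def fits_destination(data_rows: int, columns: int) -> Dict[str, bool]:
    """Report which spreadsheet destinations can hold the table plus one header row."""
    total_rows = data_rows + 1  # include the header row
    return {
        "Google Sheets": total_rows * columns <= SHEETS_MAX_CELLS,
        "Excel": total_rows <= EXCEL_MAX_ROWS and columns <= EXCEL_MAX_COLUMNS,
    }


# A daily page-level table with 1.2 million rows and 6 columns
print(fits_destination(1_200_000, 6))  # {'Google Sheets': True, 'Excel': False}
```

A table like this would fit within Sheets’ cell budget but would overflow Excel’s row limit. This is why detailed tables are trimmed or aggregated when a spreadsheet destination is chosen.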

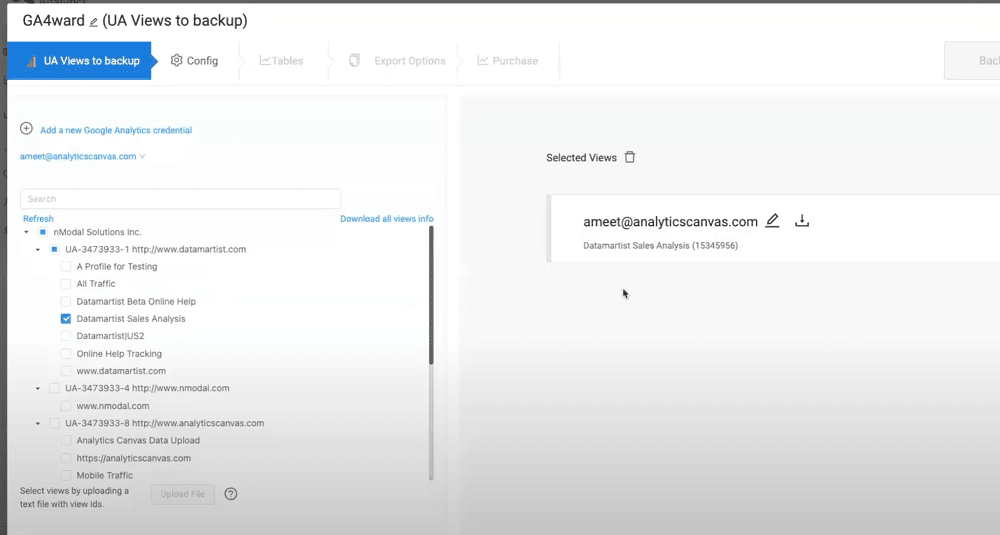

Step 5 – Select views

Next, choose how many views you wish to back up (if you’ve chosen to include dashboards, you can only choose one view).

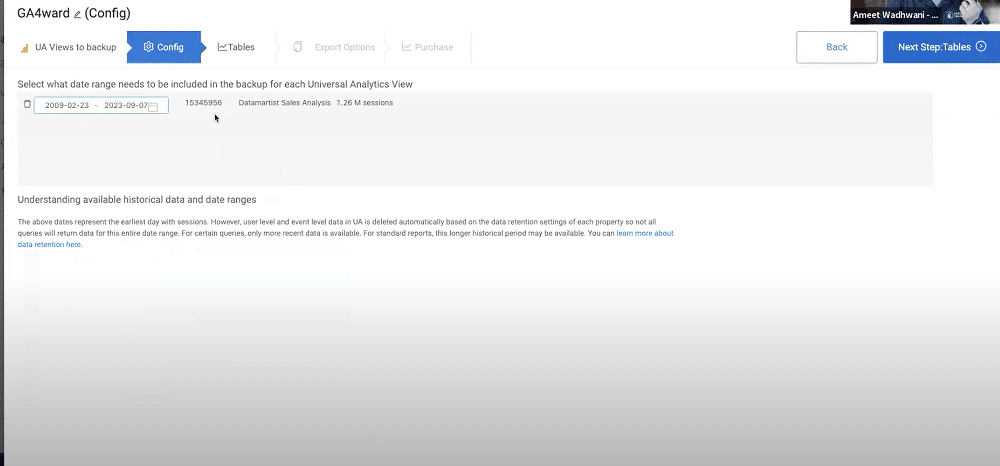

Step 6 – Define a time frame

The property is now scanned, and you will be told how much data is available. The longer the timeframe you choose, the higher the cost and the longer the backup will take.

Step 7 – Receive an estimate

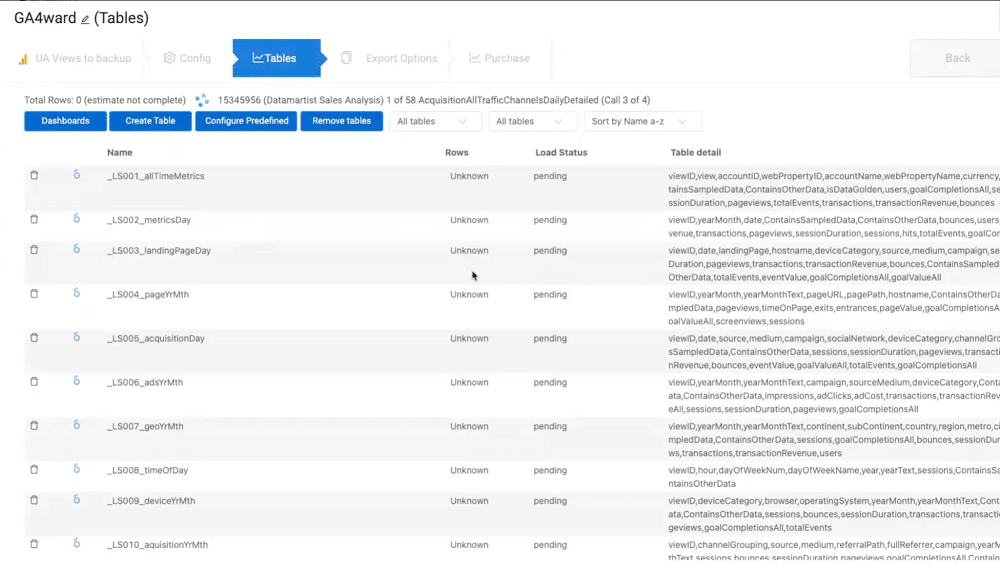

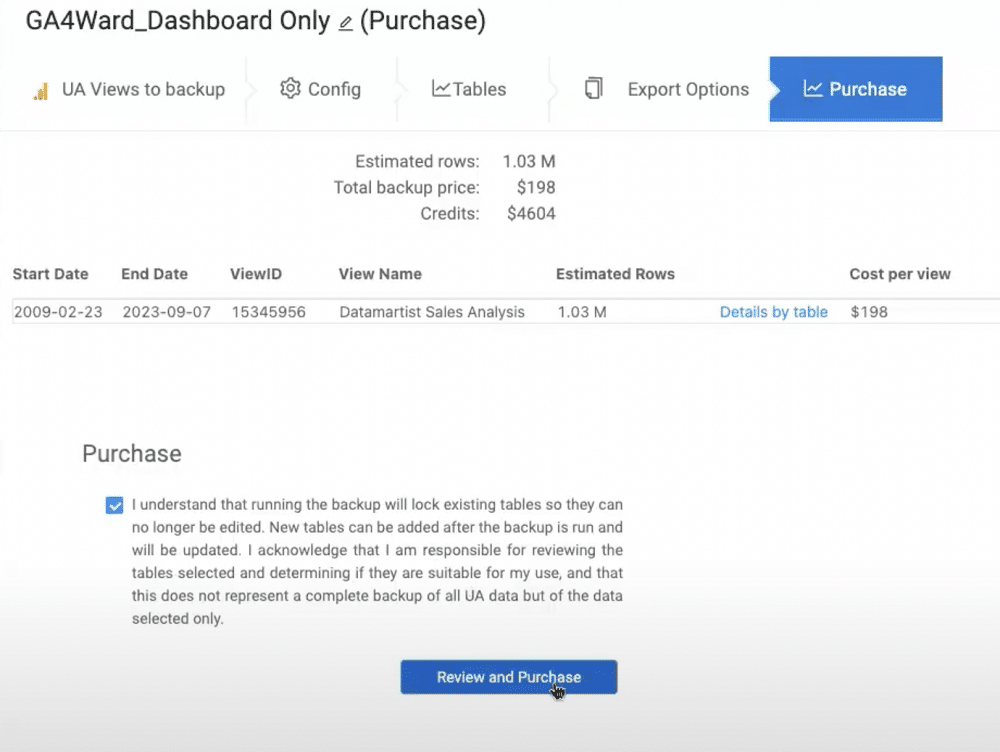

You will then be shown a library of all your tables. This is already fully configured, so there’s no need for you to do anything. At this stage, you’ll also be given an estimated cost for the backup.

With Canvas, you’re guaranteed the lower end of the estimated cost range on final delivery. If the final delivery comes in below the estimated cost, refunds will be sent beginning on July 1st.

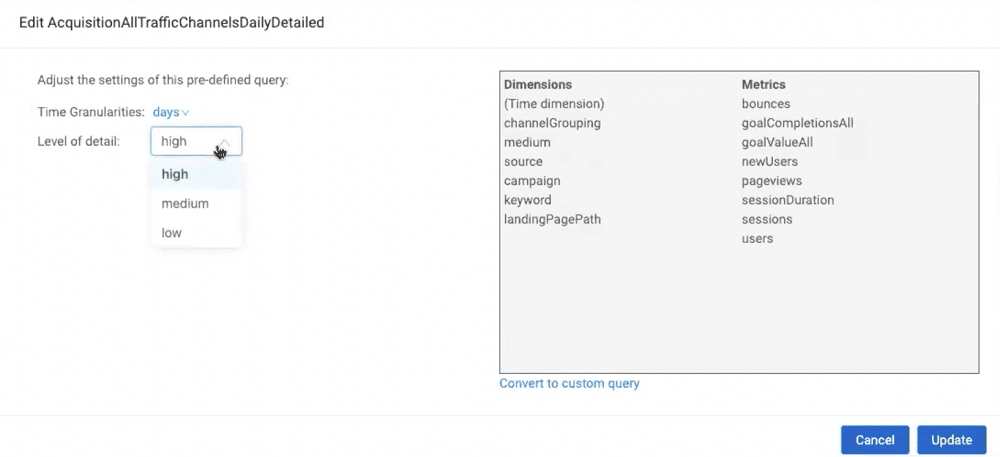

Step 8 – Choose a level of detail and granularity

From the list, you can find all the tables that line up with the UA web interface. By selecting a table, you can modify its level of detail; less detailed tables will help to reduce the cost. You can also change the granularity of the data, ranging from days to years.
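Changing granularity amounts to rolling daily rows up into coarser periods. The sketch below does this for additive metrics such as pageviews and sessions, using made-up daily rows. Unique metrics such as Users can’t be rebuilt this way. Summing daily users double-counts anyone who visited on several days, so those metrics need to be requested at the target granularity instead.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, List, Tuple

# Made-up daily rows: (day, pageviews, sessions)
daily: List[Tuple[date, int, int]] = [
    (date(2023, 1, 30), 120, 80),
    (date(2023, 1, 31), 100, 70),
    (date(2023, 2, 1), 90, 60),
]


def period_label(day: date, granularity: str) -> str:
    """Label a day with its month ('2023-01') or its year ('2023')."""
    if granularity == "month":
        return f"{day.year}-{day.month:02d}"
    if granularity == "year":
        return str(day.year)
    raise ValueError("granularity must be 'month' or 'year'")


def roll_up(rows: List[Tuple[date, int, int]], granularity: str) -> Dict[str, Dict[str, int]]:
    """Sum additive metrics into month or year buckets (not valid for Users)."""
    totals: Dict[str, Dict[str, int]] = defaultdict(lambda: {"pageviews": 0, "sessions": 0})
    for day, pageviews, sessions in rows:
        bucket = totals[period_label(day, granularity)]
        bucket["pageviews"] += pageviews
        bucket["sessions"] += sessions
    return dict(totals)


print(roll_up(daily, "month"))
```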

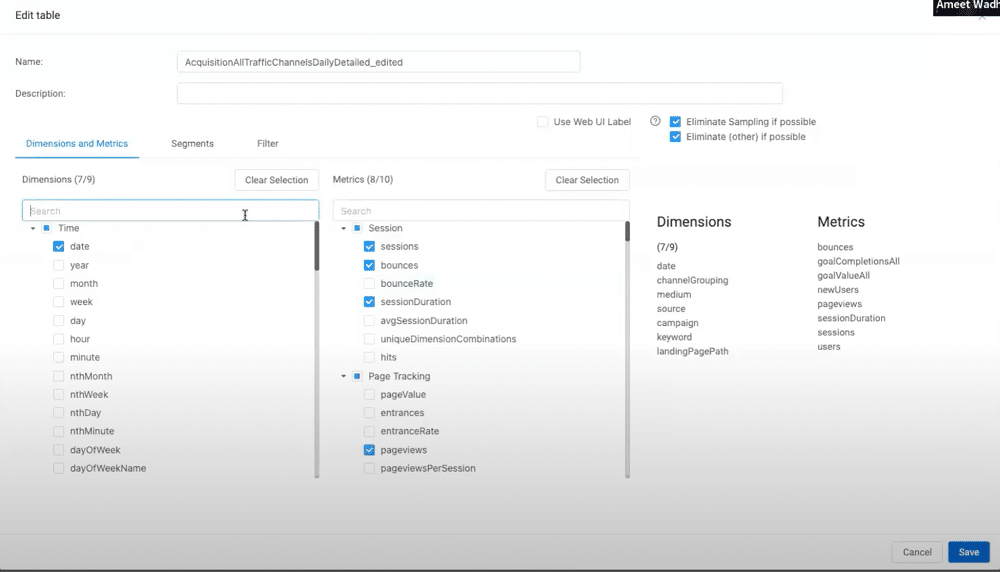

Step 9 – Add custom dimensions, variables, segments, or filters

You also have the option of converting a table to a custom query. This allows you to add custom dimensions or variables. Additionally, you can add segments or filters.

Step 10 – Select an export location

Once the configuration is complete, you can choose where you want your data to go: Google Sheets, Excel (if you don’t have any dashboards), or BigQuery.

You’ll then choose the specific destination, for instance, a BigQuery dataset.

Step 11 – Review and purchase

Lastly, review the terms of purchase and, if you’re happy, accept them.

Step 12 – Review your backup

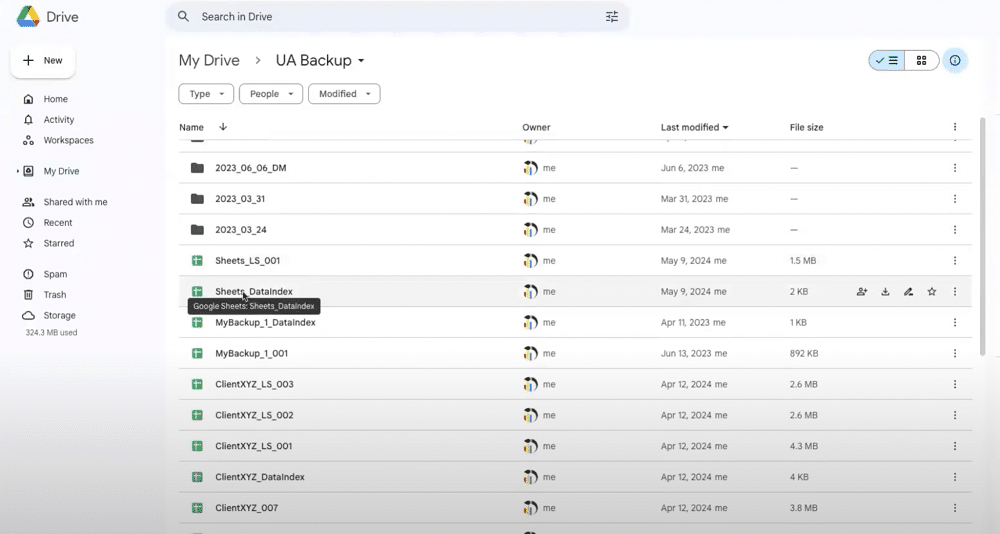

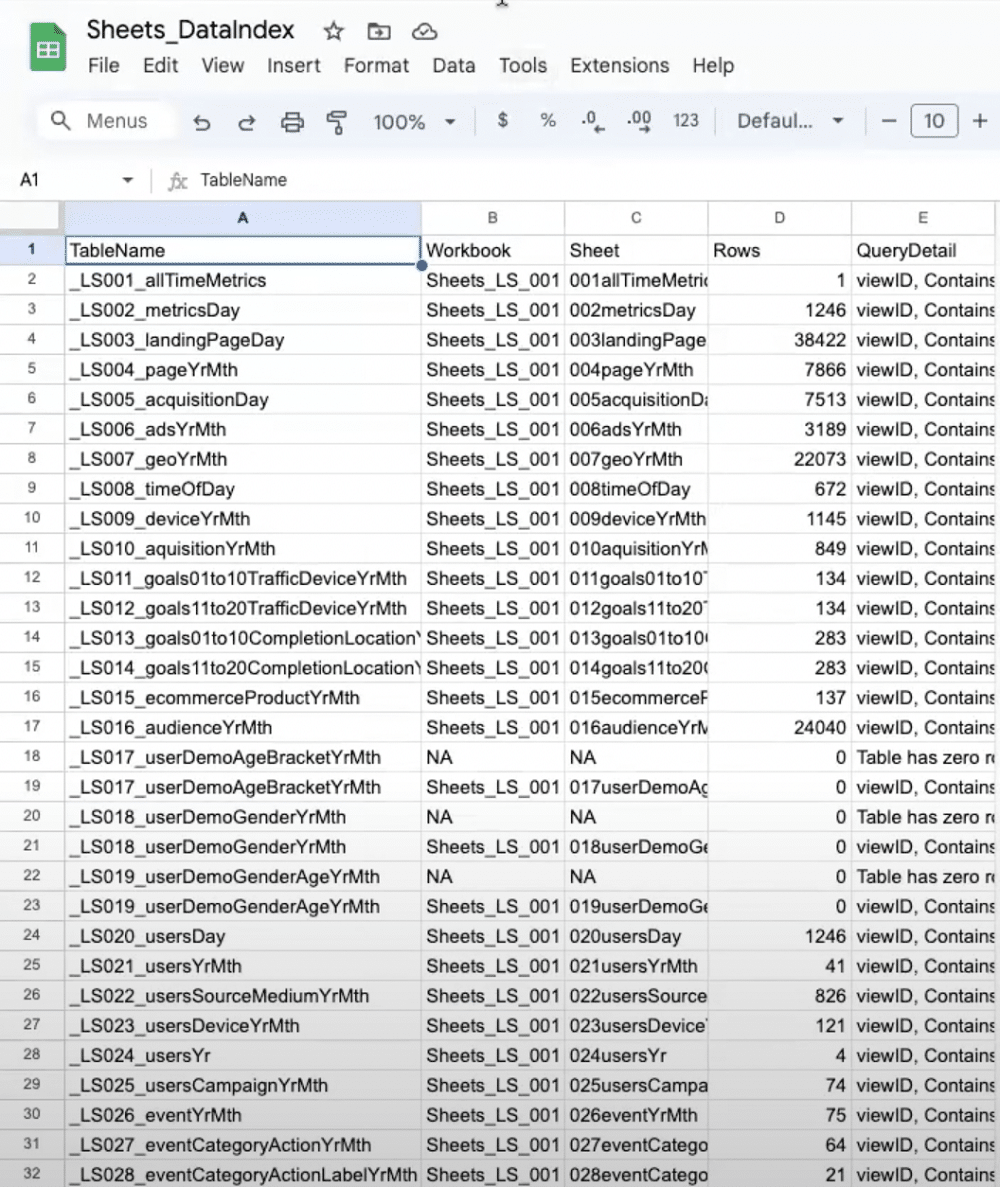

Now, let’s look at what your backup will look like once downloaded. This will be found in your Google Drive ‘UA Backup’ folder.

Within this folder, you’ll find your data index, which describes what each spreadsheet contains, including the number of tables and the number of rows.

Step 13 – Generate a report based on the backup

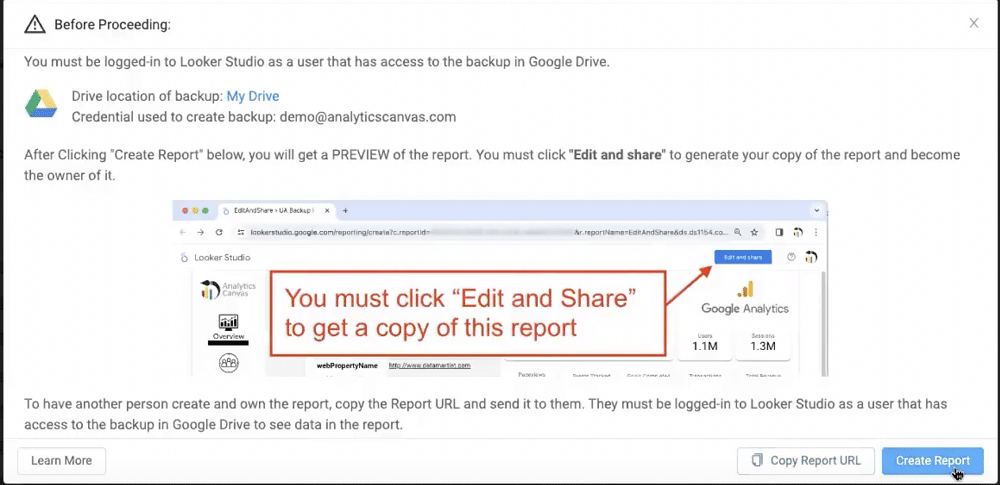

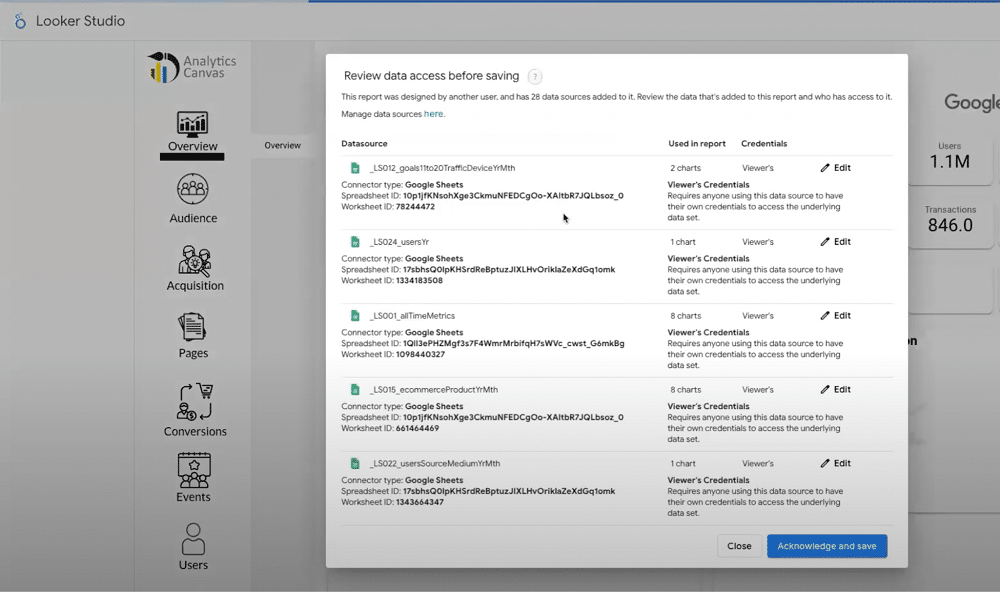

Let’s generate a report based on our backup within Looker Studio. From the ‘Create Report’ screen, you also have the option of sharing a link: simply click ‘Copy Report URL’. This enables clients to own their data.

Step 14 – Save your report

To ensure that your reports are properly saved within Looker Studio, you’ll need to select ‘Edit and save’. Make sure you don’t make any edits to your reports at this stage, as this can prevent you from owning your report.

Once complete, you’ll own your reports and can make any edits that you’d like.

Comparing report data

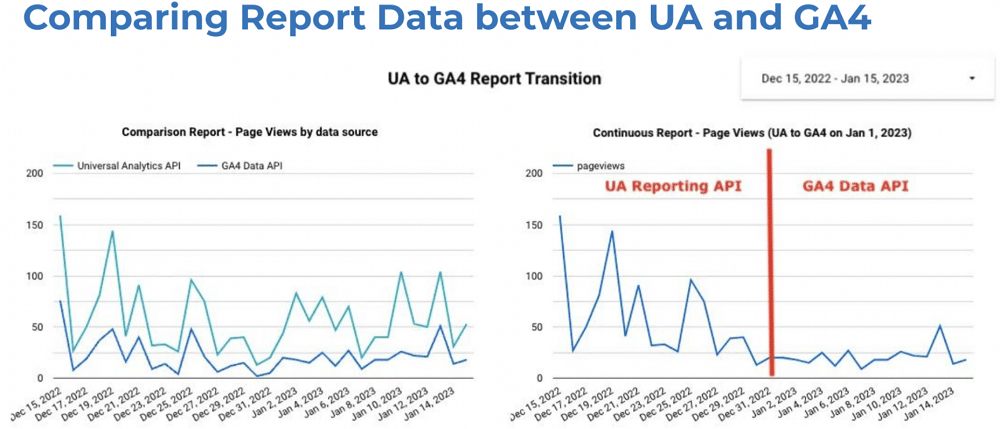

You now know how to back up Universal Analytics data using Canvas. But what should you do once historical data is in your account? The first step should be to create blended views to compare your UA and GA4 data.

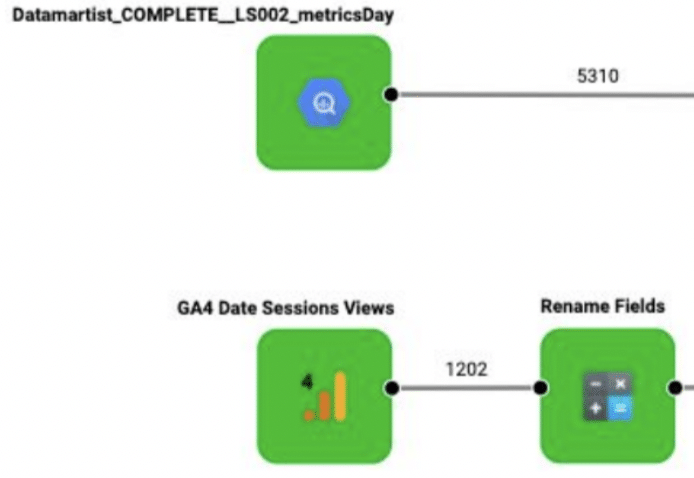

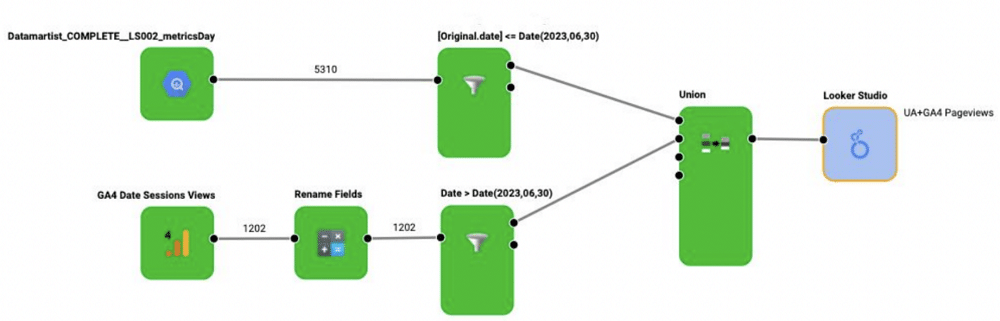

Let’s look at a classic data exercise. We’ll start with our UA data in a BigQuery table, then bring in a similar table from GA4. Alongside this, we’ll add a calculation block and rename the fields: in UA we have ‘pageviews’, while in GA4 we have ‘screenPageViews’ (shown as ‘Views’ in GA4 reports).

Next, we need to add some filters. We want the UA data to stop at the end of June 2023 and GA4 reporting to start from July 1st, 2023. Once created, the two tables need to be unioned together. This produces a single table of data structured with the columns that we need. Finally, we’ll add a Looker Studio table.
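The same steps can be sketched in code. This minimal Python version takes made-up monthly rows from each source and renames GA4’s `screenPageViews` metric (the API name for Views) to `pageviews`. It then keeps UA rows up to 30 June 2023 and GA4 rows from 1 July 2023, and unions them into one table with a `source` column. In practice you’d more likely do this in BigQuery SQL or in a reporting tool. The field layout and sample values here are illustrative only.

```python
from datetime import date
from typing import Dict, List

UA_CUTOFF = date(2023, 6, 30)   # last day of UA data to keep
GA4_START = date(2023, 7, 1)    # first day of GA4 data to keep

ua_rows = [{"date": date(2023, 5, 1), "pageviews": 5000},
           {"date": date(2023, 7, 1), "pageviews": 4100}]    # after the cutoff, so dropped
ga4_rows = [{"date": date(2023, 6, 1), "screenPageViews": 4700},  # before GA4_START, so dropped
            {"date": date(2023, 7, 1), "screenPageViews": 4600}]


def blend(ua: List[Dict], ga4: List[Dict]) -> List[Dict]:
    """Rename GA4 fields to the UA name, apply the cut-over filters and union the rows."""
    ua_part = [{"date": r["date"], "pageviews": r["pageviews"], "source": "UA"}
               for r in ua if r["date"] <= UA_CUTOFF]
    ga4_part = [{"date": r["date"], "pageviews": r["screenPageViews"], "source": "GA4"}
                for r in ga4 if r["date"] >= GA4_START]
    return sorted(ua_part + ga4_part, key=lambda r: r["date"])


for row in blend(ua_rows, ga4_rows):
    print(row)
```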

We can now start building comparison reports, spot trends, and identify differences. Directionally, GA4 and UA should be very similar. You may notice some spikes in UA traffic compared to GA4. This is likely because GA4 is much better at detecting bots.
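For months when both properties were collecting data, a simple percentage-difference check shows whether the two are directionally similar. It also flags months where UA runs noticeably higher than GA4, which is where bot traffic is one likely explanation. The 15% threshold and the sample numbers below are arbitrary assumptions, and the check is deliberately one-sided.

```python
from typing import Dict, List


def compare(ua: Dict[str, int], ga4: Dict[str, int], threshold: float = 0.15) -> List[str]:
    """Return months where UA exceeds GA4 by more than `threshold` (as a fraction of GA4)."""
    flagged = []
    for month in sorted(ua.keys() & ga4.keys()):  # only months present in both sources
        if ga4[month] == 0:
            continue  # avoid dividing by zero
        diff = (ua[month] - ga4[month]) / ga4[month]
        print(f"{month}: UA vs GA4 {diff:+.1%}")
        if diff > threshold:
            flagged.append(month)
    return flagged


# Made-up monthly pageviews from a period when both properties were running
ua_monthly = {"2023-04": 10_400, "2023-05": 13_900, "2023-06": 10_100}
ga4_monthly = {"2023-04": 10_000, "2023-05": 10_200, "2023-06": 9_800}
print(compare(ua_monthly, ga4_monthly))
```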

Comparable metrics

There aren’t many comparable metrics between GA4 and UA. Google has published comparisons for the following metrics:

- Users

- Pageviews

- Purchases

- Sessions

- Conversions

- Bounce rate

- Event Count

You can read more about these here.

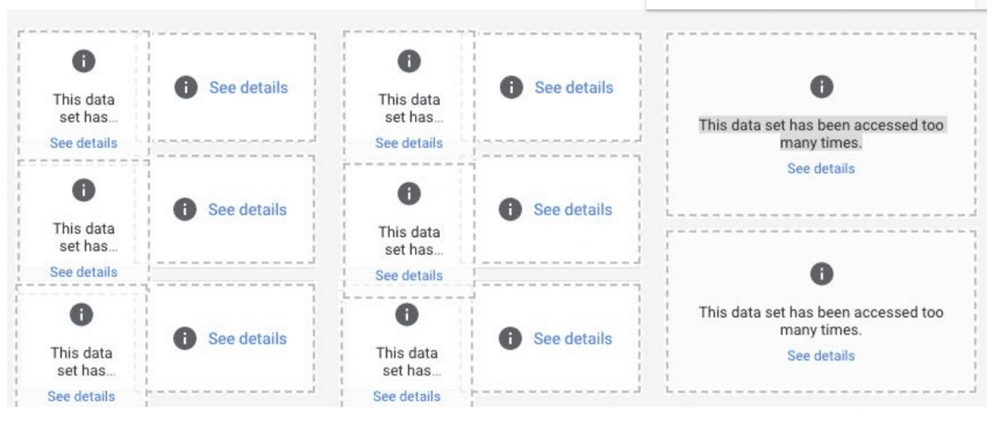

GA4 reporting challenges

After completing your blend and starting to work with the GA4 API, you might start running into some limits. GA4 reporting is no longer free: if you’re using the API, you now pay through a token-based quota system. This was a bigger issue in 2022-23 and is now only really a problem for larger sites.

If you’re using Google Analytics with BigQuery, you’ll now be paying with dollars after your free credit expires. When handled correctly, your queries can be quite low-cost. However, if you have a big site, there may be some heavy costs.

There are also several other challenges associated with GA4 reporting. These include:

- An entirely new data model – we must put aside our learnings from UA and learn a new data model.

- Queries are harder – Knowing which dimension and metric combinations are compatible is much more difficult, and you’ll get the wrong results if you add the wrong combination of dimensions.

- Queries cost $ or tokens – If you’re doing a heavy amount of development in a day, there’s a high chance you’ll hit the token limit.

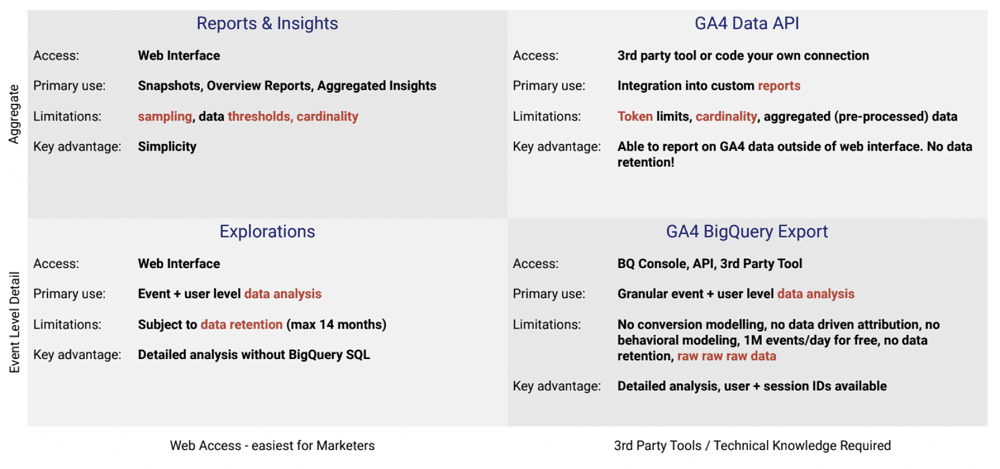

GA4 reporting surfaces

Within GA4, there are four reporting surfaces: Reports, Explorations, the API, and the BigQuery export.

Each reporting surface is a distinct data source, offering different dimensions and metrics and capturing different information.

Reports show the highest-level, aggregated data and don’t have data retention limits applied. Explorations, on the other hand, are limited to 14 months of data retention. The API draws on the same data as Reports, so it also has no retention limits, but it lacks the granular detail of the BigQuery export.

The BigQuery export has its own collection mechanism and contains very rich data. The primary difference between the export and the API is that the export uses only ‘collected’ data, while the API also includes ‘connected’ data.

Connected data includes your Search Console and Google Ads data. This data is brought into the GA4 reports and the API, but it is not present in the BigQuery export, which contains the raw, event-level data collected by GA4 and exported to BigQuery.

Reporting using the API

In November 2022, many users started seeing quota errors in their reports after Google introduced token limits to relieve pressure on its API.

To stay within token limits, bear the following points in mind:

- Don’t ask for the same data twice.

- Stage your data and keep it out of the analytics servers (in BigQuery or elsewhere).

- Load history once, then load incrementally. Every day, request the last three days’ worth of data so that your staged data stays up to date with the API.
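The load-once, then-incremental pattern can be sketched as follows. `fake_fetch` is a hypothetical stand-in for whatever client you use to call the GA4 Data API. The staging store is a plain dictionary keyed by date, standing in for a BigQuery table or similar. Each run requests only the dates missing from the store, plus the most recent three days. Those three days are overwritten on every run, on the assumption that recent GA4 data can still change while processing completes.

```python
from datetime import date, timedelta
from typing import Callable, Dict, List

LOOKBACK_DAYS = 3  # recent days that are always re-requested


def dates_to_request(store: Dict[date, int], history_start: date, today: date) -> List[date]:
    """Dates missing from the store, plus the last LOOKBACK_DAYS complete days."""
    yesterday = today - timedelta(days=1)
    refresh_from = today - timedelta(days=LOOKBACK_DAYS)
    wanted = []
    day = history_start
    while day <= yesterday:
        if day not in store or day >= refresh_from:
            wanted.append(day)
        day += timedelta(days=1)
    return wanted


def incremental_load(store: Dict[date, int], history_start: date, today: date,
                     fetch_report: Callable[[date], int]) -> List[date]:
    """Fetch only what is needed and upsert it into the staging store."""
    requested = dates_to_request(store, history_start, today)
    for day in requested:
        store[day] = fetch_report(day)  # overwrite, so late-processed data replaces old values
    return requested


def fake_fetch(day: date) -> int:
    """Hypothetical stand-in for a GA4 Data API call; returns a dummy pageview count."""
    return 100


store: Dict[date, int] = {}
first = incremental_load(store, date(2024, 6, 1), date(2024, 6, 10), fake_fetch)   # history load
second = incremental_load(store, date(2024, 6, 1), date(2024, 6, 11), fake_fetch)  # next day
print(len(first), second)
```

The first run loads the whole history once. The second run asks for just three days, which is what keeps daily token use low.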

Reporting using BigQuery

The BigQuery export seems like a complex model, but there are a lot of resources to help, and new videos and articles are constantly arriving to make the process easier. The BigQuery export also has some great advantages.

Firstly, it’s ‘free’: you don’t need to be a 360 customer to activate the export. For most users, the costs will be zero or very low, although larger websites will incur costs.

BigQuery is also the dataset that analysts have been asking for for a long time. It contains user and session IDs enabling us to create funnels and segments. Thanks to this we can better understand how users are progressing through our sites.
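Because the export keeps every event together with the user’s identifier, funnels can be computed directly. The sketch below counts how many users reached each step of a hypothetical `page_view` → `add_to_cart` → `purchase` funnel. Users are identified by `user_pseudo_id`, the export’s pseudonymous user ID. A user is counted at a step only after completing the earlier steps, with `event_timestamp` used to enforce the order. The rows are made up and heavily simplified, and against real data you’d typically write this in SQL.

```python
from collections import defaultdict
from typing import Dict, List

FUNNEL = ["page_view", "add_to_cart", "purchase"]

events: List[Dict] = [  # made-up, simplified export rows
    {"user_pseudo_id": "A", "event_name": "page_view", "event_timestamp": 1},
    {"user_pseudo_id": "A", "event_name": "add_to_cart", "event_timestamp": 2},
    {"user_pseudo_id": "A", "event_name": "purchase", "event_timestamp": 3},
    {"user_pseudo_id": "B", "event_name": "page_view", "event_timestamp": 1},
    {"user_pseudo_id": "B", "event_name": "add_to_cart", "event_timestamp": 5},
    {"user_pseudo_id": "C", "event_name": "add_to_cart", "event_timestamp": 1},  # skipped step 1
]


def funnel_counts(rows: List[Dict], steps: List[str]) -> List[int]:
    """Count users reaching each step, only after completing the previous steps in order."""
    by_user: Dict[str, List[Dict]] = defaultdict(list)
    for row in rows:
        by_user[row["user_pseudo_id"]].append(row)
    counts = [0] * len(steps)
    for user_events in by_user.values():
        step = 0
        for event in sorted(user_events, key=lambda e: e["event_timestamp"]):
            if step < len(steps) and event["event_name"] == steps[step]:
                counts[step] += 1
                step += 1
    return counts


print(funnel_counts(events, FUNNEL))  # [2, 2, 1]
```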

The export also enables us to build our own segments, which isn’t possible using the API.

Finally, BigQuery is very easy to integrate with dashboards and reports; almost any modern tool will connect to it.

The downsides

Unfortunately, it isn’t all positive. If you handle the export poorly, such as by connecting lots of raw data to a reporting tool such as Looker Studio, it can be a costly exercise.

Another downside is that the export requires some knowledge of SQL, and you’ll also need a strong understanding of the data model to write queries. As tools such as Dataform emerge, however, this is becoming much less of a barrier.

The data is also a lot more raw than many people realise: in many cases, users have to calculate dimensions and metrics themselves.
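Sessions are a good example. The export has no session table, so a common approach is to count distinct combinations of `user_pseudo_id` and the `ga_session_id` event parameter. The pair is needed because a session ID on its own isn’t unique across users. The sketch below does this on made-up rows shaped like the export, where `event_params` is a list of key/value records. In BigQuery, the equivalent query would read the parameter with `UNNEST(event_params)`. Results may differ slightly from the GA4 interface, which uses estimation for some counts.

```python
from typing import Any, Dict, List, Optional

events: List[Dict[str, Any]] = [  # made-up rows shaped like the GA4 export
    {"user_pseudo_id": "u1", "event_name": "session_start",
     "event_params": [{"key": "ga_session_id", "value": {"int_value": 1001}}]},
    {"user_pseudo_id": "u1", "event_name": "page_view",
     "event_params": [{"key": "ga_session_id", "value": {"int_value": 1001}},
                      {"key": "page_location", "value": {"string_value": "https://example.com/"}}]},
    {"user_pseudo_id": "u1", "event_name": "page_view",
     "event_params": [{"key": "ga_session_id", "value": {"int_value": 2002}}]},
    {"user_pseudo_id": "u2", "event_name": "page_view",
     "event_params": [{"key": "ga_session_id", "value": {"int_value": 1001}}]},
]


def int_param(event: Dict[str, Any], key: str) -> Optional[int]:
    """Return an integer event parameter, or None if it is absent or not an integer."""
    for param in event["event_params"]:
        if param["key"] == key:
            return param["value"].get("int_value")
    return None


def count_sessions(rows: List[Dict[str, Any]]) -> int:
    """Count distinct (user_pseudo_id, ga_session_id) pairs."""
    sessions = set()
    for event in rows:
        session_id = int_param(event, "ga_session_id")
        if session_id is not None:
            sessions.add((event["user_pseudo_id"], session_id))
    return len(sessions)


print(count_sessions(events))  # 3
```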

Backup now!

If you don’t back up now, you’ll soon lose access to your UA data. In this article, we’ve shown how to back up Universal Analytics data. Let’s quickly recap some of the key points:

- Store your UA data in BigQuery for ease of access

- Activate the GA4 BigQuery Event Export

- Report on GA4 using both the API + Event export

- Blend UA + GA4 data to help ease the transition

About Ameet Wadhwani

Ameet Wadhwani is the Product Manager for Analytics Canvas and has been helping agencies, consultants, and GA-certified individuals report on GA data for over 12 years. Having worked closely with GA4 data since its inception, he writes extensively on GA4 reporting challenges and solutions on the Analytics Canvas blog. You’ll often find him in the #Measure and #analytics-canvas Slack channels, as well as on LinkedIn, Facebook, and Reddit, contributing GA expertise to those struggling with reporting.

- How to Backup Universal Analytics Data Easily - 28/06/2024
